Audio phase plays a crucial role in shaping the quality and clarity of sound in music production, recording, and playback. This article delves into the complexities of audio phase, providing insight into its importance and offering practical tips for managing phase-related challenges to create a balanced and immersive listening experience.

Audio phase refers to the position of a waveform in time relative to another waveform or a reference point. In sound, phase is crucial for maintaining coherence, stereo imaging, and preventing cancellation or reinforcement of specific frequencies. Understanding phase is essential in audio processing, as it influences recording, mixing, and playback quality.

Discover how audio phase manipulation can unlock new dimensions in your music, creating unique and engaging sounds that captivate your audience. Master the art of phase control to prevent phase-related issues, ensuring pristine audio quality in your productions.

Table Of Contents

1. Definition Of Audio Phase

2. Phase Relationships In Audio Signals

3. Phase Cancellation And Constructive Interference

4. The Importance Of Phase Coherence

5. Phase Issues In Multi-Microphone Recordings

6. Proper Phase Alignment In Audio Mixing

7. The Role Of Phase In Audio Effects

8. Phase In Speaker Design And Room Acoustics

9. The Significance Of Phase In Audio Processing

1. Definition Of Audio Phase

Audio phase is a fundamental concept that significantly impacts the quality and characteristics of sound in various aspects of audio production.

In the context of audio signals, phase represents the position of a waveform in time relative to another waveform or a reference point. It is typically measured in degrees, with a complete cycle of a waveform representing 360 degrees.
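Because phase is measured against the length of one cycle, the same time offset corresponds to a different number of degrees at each frequency. The short Python sketch below illustrates that conversion; the function name `phase_offset_degrees` is a hypothetical helper made up for this example, and the 1 ms delay is an arbitrary test value.

```python
def phase_offset_degrees(delay_s: float, freq_hz: float) -> float:
    """Phase offset (wrapped to 0-360 degrees) that a time delay produces at one frequency."""
    # One full cycle is 360 degrees, and a delay spans (freq * delay) cycles.
    return (360.0 * freq_hz * delay_s) % 360.0


# The same 1 ms delay, viewed at three different frequencies.
for freq in (100.0, 250.0, 500.0):
    print(f"{freq:6.0f} Hz -> {phase_offset_degrees(0.001, freq):6.1f} degrees")
```

With a 1 ms delay, this works out to about 36 degrees at 100 Hz, 90 degrees at 250 Hz, and 180 degrees at 500 Hz, which is why a small timing difference can leave low frequencies almost untouched while cancelling higher ones.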

The phase relationship between two waveforms determines how they interact with each other when combined, which can lead to constructive or destructive interference.

Constructive interference occurs when waveforms are in-phase, causing an increase in amplitude, while destructive interference, or phase cancellation, happens when waveforms are out-of-phase, resulting in a reduction or complete loss of specific frequencies.

Understanding audio phase is crucial for audio engineers and producers, as it impacts recording, mixing, and playback quality, and can help avoid unwanted phase-related issues in audio production.

2. Phase Relationships In Audio Signals

In an audio waveform, the peaks and troughs represent the highest and lowest points of the waveform’s amplitude, respectively. The phase of an audio waveform refers to the position of these peaks and troughs in time relative to a reference point or another waveform.

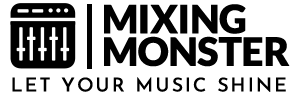

In audio signals, various phase relationships can impact the quality and characteristics of sound significantly. Here are some common phase relationships and their effects on audio quality:

Phase Relationships In Audio Signals:

- In-Phase:

When two or more audio signals have the same frequency and their peaks and troughs align perfectly, they are considered in-phase. In this relationship, the signals constructively interfere with each other, resulting in an increase in amplitude. The combined signal will be louder, and the audio quality will remain clear and intact.

- Out-Of-Phase:

When the peaks of one audio signal align with the troughs of another, they are considered out-of-phase or 180 degrees out of phase. In this relationship, the signals destructively interfere with each other, leading to phase cancellation.

This can result in a loss of specific frequencies, a hollow or thin sound, or even complete silence in extreme cases. Out-of-phase signals can cause a loss of audio quality, especially in stereo recordings, where it can negatively affect the spatial perception of the sound.

- Phase Shift:

A phase shift occurs when two audio signals have the same frequency but are not perfectly in-phase or out-of-phase. Instead, they have a varying degree of phase difference, such as 90 degrees or 270 degrees.

Phase shifts cause partial cancellation or reinforcement, which alters the frequency balance of the combined signal. When the offset comes from a time delay rather than a fixed phase angle, the same delay corresponds to a different phase shift at every frequency, and the result is comb filtering: a series of regularly spaced notches and peaks in the frequency response that colors the original sound.
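The three relationships above can be checked numerically. The following Python sketch uses NumPy to sum two equal-level sine waves at a chosen phase offset and report the peak of the result. The function name `summed_peak` and the 100 Hz test tone are illustrative assumptions, not part of any particular tool.

```python
import numpy as np


def summed_peak(phase_deg, freq_hz=100.0, sample_rate=48000, duration_s=0.1):
    """Peak level of two unit-amplitude sines summed with a phase offset."""
    t = np.arange(int(sample_rate * duration_s)) / sample_rate
    first = np.sin(2 * np.pi * freq_hz * t)
    second = np.sin(2 * np.pi * freq_hz * t + np.deg2rad(phase_deg))
    return float(np.max(np.abs(first + second)))


for phase in (0, 90, 180):
    print(f"{phase:3d} degrees -> peak {summed_peak(phase):.3f}")
```

In-phase signals double in amplitude (a peak of about 2.0), a 90-degree shift gives a partial reinforcement of about 1.41, and a 180-degree offset leaves essentially nothing.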

Knowing and managing these phase relationships is crucial for audio professionals who want to maintain audio quality and avoid unwanted phase-related issues in music production, recording, mixing, and live sound reinforcement.

3. Phase Cancellation And Constructive Interference

Phase cancellation and constructive interference are phenomena that occur when two or more audio signals with the same frequency are combined. As mentioned above, these interactions can result in either a loss or a reinforcement of specific frequencies, significantly impacting the overall audio quality.

- Phase Cancellation:

Phase cancellation occurs when two audio signals are out-of-phase or 180 degrees out of phase. In this case, the peaks of one waveform align with the troughs of the other waveform. When combined, these signals destructively interfere with each other, leading to a reduction or complete loss of specific frequencies.

Example Of Phase Cancellation:

Imagine two microphones placed at different distances from a sound source, such as a guitar amplifier. The sound waves captured by the microphones will have different arrival times due to the varying distances.

When the recorded signals are combined during mixing, they may be partially or completely out-of-phase, causing phase cancellation. This can lead to a thin or hollow sound, as certain frequencies are reduced or entirely lost.

- Constructive Interference:

Constructive interference occurs when two or more audio signals are in-phase, meaning their peaks and troughs align perfectly. In this relationship, the signals constructively interfere with each other, resulting in an increase in amplitude and the reinforcement of specific frequencies.

Example Of Constructive Interference:

Consider a recording of a snare drum using two microphones: one placed above the drum to capture the attack and the other placed below the drum to capture the snares.

If the microphones are positioned and aligned correctly, ensuring the waveforms are in-phase when combined, the resulting audio signal will have a full, balanced sound with a clear attack and snare presence. Because the two microphones face opposite sides of the drum head, this usually means inverting the polarity of the bottom microphone. In this case, constructive interference reinforces the desired frequencies, enhancing the overall audio quality.
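To put rough numbers on the two-microphone guitar amplifier example earlier in this section, the sketch below converts an extra path length into a delay and lists the first few frequencies at which the combined signals cancel. It assumes a speed of sound of 343 m/s (dry air at about 20 °C) and equal levels at both microphones; the function name `comb_notches_hz` is hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def comb_notches_hz(extra_distance_m, count=3):
    """First `count` cancellation frequencies when a signal is summed with a
    copy that travelled `extra_distance_m` further (equal levels assumed)."""
    delay_s = extra_distance_m / SPEED_OF_SOUND_M_S
    # Cancellation occurs where the delay equals an odd number of half cycles.
    return [(2 * k + 1) / (2 * delay_s) for k in range(count)]


# A second microphone 34.3 cm further from the amplifier (a 1 ms delay).
print([round(f) for f in comb_notches_hz(0.343)])
```

A path difference of 34.3 cm places notches near 500 Hz, 1.5 kHz, and 2.5 kHz. In a real recording the more distant microphone is usually quieter, so the notches are shallower than this idealized case suggests.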

Managing phase relationships to avoid phase cancellation and leverage constructive interference is vital for audio professionals to maintain audio quality and balance in various aspects of music production.

4. The Importance Of Phase Coherence

Phase coherence is a concept that refers to the consistency of phase relationships between multiple audio signals or channels, particularly in stereo or multi-channel audio systems.

It is essential for preserving the integrity of the audio signal, ensuring accurate spatial perception, and maintaining a balanced stereo image. In music production and recording, phase coherence is vital for several reasons:

Impacts Of Phase Coherence On Music Production:

- Stereo Imaging:

A coherent phase relationship between the left and right channels of a stereo recording helps create a precise stereo image, allowing listeners to perceive the correct placement and positioning of instruments and sound sources within the stereo field. If phase coherence is not maintained, the stereo image may become blurry, unstable, or suffer from positional inaccuracies.

- Mono Compatibility:

Ensuring phase coherence between stereo channels is crucial for maintaining mono compatibility. When a stereo recording is summed to mono, in-phase signals will reinforce each other, while out-of-phase signals may cancel each other out, leading to a loss of specific frequencies or an imbalance in the audio signal. Mono compatibility is still crucial in various applications, such as radio broadcasting, public address systems, or when listening to music on a single speaker device.

- Multi-Microphone Recordings:

When recording with multiple microphones, maintaining phase coherence helps prevent phase cancellation and other phase-related issues that can negatively impact audio quality. Proper microphone placement, time alignment, and polarity inversion are techniques that can be used to ensure phase coherence in multi-microphone recordings.

- Mixing And Audio Processing:

During mixing, phase coherence becomes important when blending multiple audio tracks or applying audio processing techniques, such as equalization, compression, or time-based effects. Preserving phase coherence in the mix helps maintain audio clarity, balance, and accurate spatial perception.
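The mono compatibility point above lends itself to a quick numerical check. This Python sketch folds a stereo pair down to mono and reports how much level is lost relative to the stereo signal. The function name `mono_fold_down_db`, the 0.5 fold-down gain, and the 100 Hz test tone are assumptions chosen for illustration, and the input is assumed not to be silent.

```python
import numpy as np


def rms(x):
    """Root-mean-square level of a signal."""
    return float(np.sqrt(np.mean(np.square(x))))


def mono_fold_down_db(left, right):
    """Level change in dB when L and R are averaged to mono,
    relative to the combined RMS of the two stereo channels."""
    mono = 0.5 * (left + right)
    stereo_rms = np.sqrt(0.5 * (rms(left) ** 2 + rms(right) ** 2))
    return float(20 * np.log10(max(rms(mono), 1e-12) / stereo_rms))


t = np.arange(48000) / 48000
tone = np.sin(2 * np.pi * 100 * t)
shifted = np.cos(2 * np.pi * 100 * t)  # same tone, 90 degrees apart

print(mono_fold_down_db(tone, tone))     # identical channels
print(mono_fold_down_db(tone, shifted))  # 90-degree offset
print(mono_fold_down_db(tone, -tone))    # polarity-inverted channel
```

Identical channels lose nothing, a 90-degree offset costs about 3 dB, and a polarity-inverted channel collapses to silence (reported here as a very large negative number because of the floor inside the logarithm).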

Phase coherence plays a crucial role in music production and recording, as it helps maintain a balanced stereo image, accurate spatial perception, and overall audio quality. Audio engineers and producers must understand and manage phase relationships to ensure optimal results in their work.

5. Phase Issues In Multi-Microphone Recordings

When recording with multiple microphones, it is very important to keep the concept of phase relationships in mind.

Phase problems can arise in multi-microphone recordings when two or more microphones capture the same sound source at different times or distances, leading to varying phase relationships between the recorded signals.

When these signals are combined during mixing, phase issues may occur, resulting in phase cancellation, comb filtering, or a loss of audio clarity and balance. Here are some suggestions for addressing phase problems in multi-microphone recordings:

How To Avoid Phase Problems In Multi-Microphone Recordings:

- Proper Mic Placement:

Careful microphone placement is crucial for minimizing phase issues. When several microphones are open at once, follow the 3:1 rule, which states that the distance between two microphones should be at least three times the distance from each microphone to the source it is capturing. The sound reaching the more distant microphone is then low enough in level that the interference it causes when the signals are combined is much less audible.

- Coincident Or Near-Coincident Techniques:

Use coincident (e.g., X/Y) or near-coincident (e.g., ORTF, NOS) microphone techniques when recording stereo sources. In these configurations, microphones are placed close together or share the same axis, reducing phase differences between the signals and ensuring a more coherent stereo image.

- Checking Phase Relationships:

During recording, monitor the phase relationships between microphone signals by listening to them in mono or using phase meters available in some digital audio workstations (DAWs). Make adjustments to the microphone placement or angles as needed to achieve the desired phase relationship.

- Polarity Inversion:

In some cases, inverting the polarity of one microphone signal can help correct phase issues. Polarity inversion flips the waveform’s peaks and troughs, which is equivalent to a 180-degree phase shift at every frequency but, unlike a delay, does not move the signal in time. This technique is useful when dealing with signals that are close to being 180 degrees out of phase.

- Time Alignment:

In post-production, phase issues can be addressed by adjusting the timing of audio tracks to align them more accurately. This can be done manually by shifting audio regions or using time-alignment tools and plugins available in most DAWs.
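The time alignment and polarity checks described above can be sketched in a few lines of Python. The example below estimates how many samples one track trails another by finding the peak of their cross-correlation, and flags a polarity flip when that peak is negative. This is only one simple way to do it, not a description of how any specific alignment plugin works; the function names `estimate_lag` and `align` are hypothetical.

```python
import numpy as np


def estimate_lag(reference, delayed):
    """Return (lag_samples, polarity_inverted) for `delayed` relative to `reference`.

    A positive lag means `delayed` arrives later than `reference`.
    """
    corr = np.correlate(delayed, reference, mode="full")
    peak = int(np.argmax(np.abs(corr)))
    lag = peak - (len(reference) - 1)  # index len-1 corresponds to zero lag
    return lag, bool(corr[peak] < 0)


def align(delayed, lag, polarity_inverted):
    """Shift `delayed` by `lag` samples (zero-padded) and undo a polarity flip."""
    if lag >= 0:
        shifted = np.concatenate([delayed[lag:], np.zeros(lag)])
    else:
        shifted = np.concatenate([np.zeros(-lag), delayed[:lag]])
    return -shifted if polarity_inverted else shifted


# Simulated close mic and a far mic that is 37 samples late and flipped.
rng = np.random.default_rng(0)
close_mic = rng.standard_normal(2000)
far_mic = -np.concatenate([np.zeros(37), close_mic[:-37]])

lag, inverted = estimate_lag(close_mic, far_mic)
far_mic_fixed = align(far_mic, lag, inverted)
print(lag, inverted)
```

`np.correlate` is slow on full-length tracks, so a real workflow would more likely analyze a short excerpt or use an FFT-based correlation.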

By implementing these techniques and being mindful of phase relationships, audio engineers and producers can minimize phase problems in multi-microphone recordings and ensure a clearer, more balanced audio quality in their work.

6. Proper Phase Alignment In Audio Mixing

Phase alignment is essential when mixing audio tracks because it ensures the consistency of phase relationships between various tracks, preventing phase cancellation, comb filtering, or other phase-related issues that can degrade the overall audio quality, clarity, and balance.

Here are some practical tips for achieving proper phase alignment while mixing:

How To Achieve Proper Phase Alignment In Audio Mixing:

- Examine Waveforms Visually:

Start by closely examining the waveforms of the audio tracks in your DAW. Look for any obvious phase discrepancies between similar tracks, such as kick drum samples from different sources or recordings of the same instrument captured by multiple microphones. Aligning the waveforms by adjusting the timing can help minimize phase issues.

- Time Alignment Tools And Plugins:

Use time alignment tools and plugins available in most DAWs to automatically align audio tracks based on their phase relationships. These tools analyze the phase information in the audio signals and adjust the timing of the tracks accordingly to achieve better phase alignment. Some popular plugins include Sound Radix Auto-Align, Waves InPhase, and MeldaProduction MAutoAlign.

- Monitor In Mono:

When checking phase alignment, periodically switch to mono monitoring. This helps identify phase issues more easily, as phase cancellation and comb filtering become more apparent in mono. Make necessary adjustments to the track timing or polarity and continue to monitor in mono to ensure proper phase alignment.

- Use Polarity Inversion:

If two audio tracks are close to being 180 degrees out of phase, try inverting the polarity of one track. This flips the waveform, which is equivalent to a 180-degree phase shift at all frequencies, and can help correct phase issues. Most DAWs provide a polarity inversion button or plugin to facilitate this process.

- All-Pass Filters:

All-pass filters alter the phase of an audio signal without changing the level of any frequency, so the magnitude response stays flat. These filters can be used to correct phase issues that occur at specific frequencies, especially when dealing with complex audio material. Some digital mixers and audio processors include all-pass filters as a built-in feature.

- Linear Phase Equalizers:

Unlike traditional (minimum-phase) equalizers, which introduce frequency-dependent phase shifts as they modify the frequency response, linear phase equalizers delay all frequencies by the same amount and so preserve the phase relationships between frequencies. They can be used to correct frequency imbalances without introducing additional phase-related issues, at the cost of added latency. Many DAWs and audio plugins offer linear phase equalizer options.

- Phase Rotation Plugins:

Phase rotation plugins are designed to adjust the phase relationships between different frequency bands in an audio signal. These plugins can help correct phase issues that occur at specific frequency ranges or smooth out phase inconsistencies in complex audio material. Examples of phase rotation plugins include Little Labs IBP (In-Between Phase) and Waves InPhase.

- Phase Correlation Meter:

Use a phase correlation meter, a tool available in many DAWs and audio plugins, to analyze the phase relationships between tracks in real time. A phase correlation meter typically displays values between -1 and 1, where positive values indicate in-phase signals, and negative values indicate out-of-phase signals. Adjust track timing or polarity based on the meter’s readings to achieve proper phase alignment.
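The reading a phase correlation meter shows can be approximated with a normalized correlation between the two channels. The sketch below is a simplified, whole-signal version written for illustration; actual meters typically compute this over a short moving window, and the function name `phase_correlation` is hypothetical.

```python
import numpy as np


def phase_correlation(left, right):
    """Normalized correlation between two channels, from -1 to +1."""
    energy = np.sqrt(np.sum(left ** 2) * np.sum(right ** 2))
    if energy == 0:
        return 0.0  # at least one channel is silent
    return float(np.sum(left * right) / energy)


t = np.arange(48000) / 48000
tone = np.sin(2 * np.pi * 100 * t)

print(phase_correlation(tone, tone))                          # in-phase
print(phase_correlation(tone, np.cos(2 * np.pi * 100 * t)))   # 90 degrees apart
print(phase_correlation(tone, -tone))                         # polarity inverted
```

Identical channels read +1, a polarity-inverted pair reads -1, and a 90-degree offset reads close to 0, which matches the scale described above.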

By carefully managing phase relationships, you can achieve proper phase alignment when mixing audio tracks, resulting in a clearer, more balanced, and better-sounding mix.

7. The Role Of Phase In Audio Effects

Phase manipulation plays a crucial role in many audio effects, as it can create unique and engaging sounds by altering the phase relationships between different frequencies or copies of the same signal. Some popular audio effects that rely on phase manipulation include phasers, flangers, and chorus effects.

A phaser effect works by splitting an audio signal into two paths, leaving one path unprocessed and applying a series of all-pass filters to the other. The all-pass filters create phase shifts at specific frequencies, and when the processed and unprocessed signals are combined, they create notches or peaks in the frequency spectrum due to phase cancellation and constructive interference.

Modulating the all-pass filters with a low-frequency oscillator (LFO) results in a sweeping, spacey sound characteristic of the phaser effect.
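The phaser's behavior can be examined in the frequency domain without processing any audio. The sketch below assumes the common first-order all-pass form H(z) = (a + z^-1) / (1 + a z^-1), which is one possible choice rather than the design of any particular phaser, and mixes the all-pass chain equally with the dry signal. The function names and coefficient values are illustrative.

```python
import numpy as np


def allpass_response(coeff, freqs_hz, sample_rate):
    """Complex response of a first-order all-pass, H(z) = (a + z^-1) / (1 + a z^-1)."""
    z_inv = np.exp(-2j * np.pi * np.asarray(freqs_hz) / sample_rate)
    return (coeff + z_inv) / (1 + coeff * z_inv)


def phaser_magnitude_db(coeff, stages, freqs_hz, sample_rate):
    """Magnitude in dB of an equal mix of the dry signal and `stages` all-pass filters."""
    wet = allpass_response(coeff, freqs_hz, sample_rate) ** stages
    mixed = 0.5 * (1 + wet)
    return 20 * np.log10(np.maximum(np.abs(mixed), 1e-12))


freqs = np.linspace(20, 20000, 4000)

# The all-pass chain alone leaves every level untouched.
print(np.allclose(np.abs(allpass_response(-0.5, freqs, 48000)), 1.0))

# Stepping the coefficient (as an LFO would) moves the notch.
for coeff in (-0.8, -0.5, -0.2):
    response = phaser_magnitude_db(coeff, 2, freqs, 48000)
    print(coeff, round(float(freqs[np.argmin(response)])))
```

The all-pass stages on their own do not change the level of any frequency; the notch only appears once the shifted signal is mixed with the dry one, at the frequency where the total phase shift reaches 180 degrees.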

A flanger effect is created by duplicating an audio signal, delaying the duplicate by a very short time (usually a few milliseconds), and varying the delay time using an LFO.

As the delay time changes, the phase relationships between the original and delayed signals also change, causing comb filtering, which produces a series of notches and peaks in the frequency spectrum. The result is a distinct, swirling sound reminiscent of a jet plane taking off or a “whooshing” effect.
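A flanger along these lines can be sketched in Python with NumPy. This is a minimal illustration with no feedback path, and the default delay, depth, and LFO rate are assumed values rather than settings from any specific unit. Fractional delays are read with linear interpolation, and the base delay is assumed to be at least as large as the depth so that the delay never goes negative.

```python
import numpy as np


def flanger(signal, sample_rate, base_delay_ms=2.0, depth_ms=1.5, lfo_hz=0.5, mix=0.5):
    """Mix a signal with a copy whose short delay is swept by a sine LFO."""
    signal = np.asarray(signal, dtype=float)
    n = np.arange(len(signal))
    lfo = np.sin(2 * np.pi * lfo_hz * n / sample_rate)
    delay_samples = (base_delay_ms + depth_ms * lfo) * sample_rate / 1000.0
    # Read the delayed copy at fractional positions; before the start is silence.
    delayed = np.interp(n - delay_samples, n, signal, left=0.0, right=0.0)
    return (1 - mix) * signal + mix * delayed


sample_rate = 48000
t = np.arange(sample_rate) / sample_rate
noise = np.random.default_rng(0).standard_normal(sample_rate)
flanged = flanger(noise, sample_rate)
print(flanged.shape)
```

Setting `depth_ms` to zero freezes the sweep and leaves a static comb filter, which is a handy way to hear (or measure) what the moving notches are doing at any one instant.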

A chorus effect simulates the natural variation in pitch and timing that occurs when multiple sources play the same sound simultaneously, such as in a choir or an ensemble of stringed instruments.

The effect is achieved by duplicating the audio signal, applying a slight pitch modulation and a short delay to the duplicate, and then combining it with the original signal. The phase relationship between the original and modulated signals constantly changes due to the pitch modulation and delay, creating a richer, fuller sound that mimics the characteristics of multiple sound sources.

Phase manipulation is a fundamental aspect of many audio effects, allowing engineers and producers to create unique and engaging sounds by altering the phase relationships between different frequencies or copies of the same signal.

8. Phase In Speaker Design And Room Acoustics

Phase plays a significant role in speaker design, speaker placement, and room acoustics, as it can greatly impact the overall listening experience. A proper understanding of phase relationships and their implications can help optimize speaker performance, ensure accurate sound reproduction, and enhance the listener’s experience.

In multi-driver speaker systems, such as those with separate woofers, midrange drivers, and tweeters, it is crucial to maintain proper phase relationships between the drivers.

If the drivers are not in-phase, the sound waves they produce may interfere with each other, leading to phase cancellation, comb filtering, or a loss of clarity and definition.

To address this issue, speaker designers use techniques like crossover network design, driver alignment, and time alignment to ensure that the drivers operate coherently and produce an accurate soundstage.

The placement of speakers in a room can significantly affect the phase relationships between the sound waves they produce and the room’s boundaries.

When sound waves reflect off walls, floors, and ceilings, they can interact with the direct sound from the speakers, causing phase-related issues, such as cancellations or reinforcements at specific frequencies.

To minimize these problems, it is essential to position speakers correctly, considering factors like the distance from walls, the height of the speakers, and the listener’s position.
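As a rough guide to how wall distance matters, the sketch below estimates the lowest frequency at which a reflection from the wall directly behind a speaker cancels the direct sound. It assumes a perfectly reflective wall, a listener directly in front of the speaker, and a speed of sound of 343 m/s, so the reflection travels twice the speaker-to-wall distance further than the direct sound. The function name `boundary_cancellation_hz` is hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def boundary_cancellation_hz(distance_to_wall_m):
    """Lowest frequency cancelled by a rear-wall reflection.

    The extra path is twice the wall distance; cancellation starts where
    that extra path equals half a wavelength, i.e. f = c / (4 * d).
    """
    return SPEED_OF_SOUND_M_S / (4.0 * distance_to_wall_m)


for distance in (0.25, 0.5, 1.0):
    print(f"{distance} m from wall -> first cancellation near "
          f"{boundary_cancellation_hz(distance):.1f} Hz")
```

Under these assumptions, a speaker 0.5 m from the wall has its first cancellation near 171.5 Hz, and moving it further away pushes that dip lower in frequency. Real rooms add more reflecting surfaces and absorption, so this is only a starting estimate.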

The acoustics of a room can also influence the phase relationships of sound waves, affecting the overall listening experience. Room dimensions, surface materials, and the presence of furniture or other objects can cause reflections, standing waves, or flutter echoes, all of which can impact the phase coherence of the sound in the room.

To optimize room acoustics, techniques like acoustic treatment (e.g., absorption panels, bass traps, and diffusers) and room correction systems can be employed to control reflections and manage phase-related issues.

A coherent phase relationship between speakers and in the listening environment is essential for achieving a realistic and immersive listening experience. Proper phase alignment ensures accurate soundstage reproduction, precise imaging, and a balanced frequency response, allowing the listener to perceive the music or audio content as the producer or engineer intended.

9. The Significance Of Phase In Audio Processing

In conclusion, audio phase is a fundamental aspect of music production that demands careful attention and management to ensure optimal audio quality, clarity, and balance.

A comprehensive understanding of phase relationships, along with the appropriate use of phase correction tools and techniques, can significantly enhance the listening experience and contribute to the success of a production.

From multi-microphone recordings and mixing audio tracks to speaker design and room acoustics, phase plays a critical role in shaping the overall sound and spatial perception.

By diligently addressing phase-related challenges, audio engineers and producers can create engaging and immersive audio content that resonates with listeners and faithfully conveys the artistic intent of the creator.